Automation and artificial intelligence regularly make headlines, and these topics raise many interesting questions. Unfortunately, the answers usually provided prove very shallow.

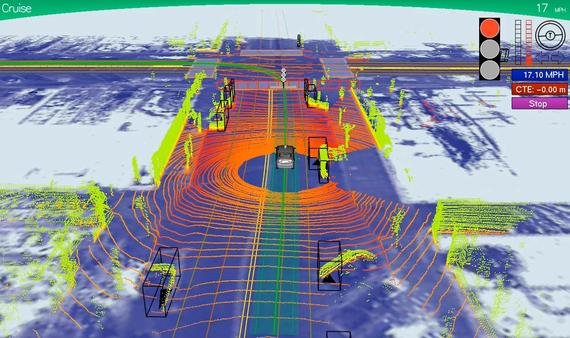

An example of such questions is: how will driverless cars make life-or-death decisions?

A classical but unsatisfactory answer (which the article above quickly rushes into, of course) lies in asserting that programs are not “moral agents” and then dismissing the question, replacing it with the assertion that the moral decision is made by the programmer when picking which algorithm or protocol to implement.

While this is true, it silently sidesteps the moral question of whether a decision-maker (or agent) ‘should’ be a “moral agent” before being authorised to drive! And, funnily enough, most countries set a minimum age for driving. This might indicate that we require human decision-makers to be grown-up enough to be moral agents, responsible for their decisions, before they're allowed to drive. (That this is a moral issue is suggested by the fact that states usually do not prevent you from endangering yourself through dangerous driving, e.g. on closed private circuits with no speed limit. They do, however, forbid you from endangering others through your dangerous driving, which may be dangerous merely because it is unpredictable to others.) Why should silicon-based decision-makers benefit from less stringent requirements regarding the capacity for moral responsibility?

Another classical but unsatisfactory answer (which the article above quickly bumps into, next) lies in asserting that life-or-death hypotheticals “are not accurate portrayals of what system needs to think about”. Yet we praise humans who do think about these. For example, Marine Capt. Jeff Kuss's F/A-18 Hornet crashed shortly after takeoff, and he was considered a ‘hero’. To prevent his aircraft from hitting an apartment building, he purposefully did not eject, so that he could manoeuvre the aircraft away from the building! If we introduce automation on the grounds that it improves things, then we ought to consider why we praise humans who handle life-or-death situations and act selflessly.

And, of course, one of the weakest answers is to ignore the debate. It simply asserts that consumers wouldn't buy cars which could decide to kill them, even if they'd happily support the idea of others buying self-sacrificing cars, or even of legally enforcing self-sacrifice. Therefore, for the sake of big business, we should make sure self-driving cars are ‘selfish.’ This is weak for three reasons. Consumers routinely pay to take risks (e.g. when practising some sports). They routinely accept risks (e.g. when ceding the monopoly on violence to a government). And ignoring moral concerns for the sake of profits isn't a valid moral answer.

One of the best answers to the moral conundrum is based on statistics. It states that it would be unethical to delay self-driving cars, because they offer the prospect of fewer accidents. This doesn't deny imperfection, but compares it to the imperfections of human drivers, which is a reasonable baseline. In a sense, this is a direct application of utilitarian ethics to an ethics-agnostic technical proposal (one can compare this to the introduction of another imperfect but valuable technology: airbags).

There are, however, two difficulties. Firstly, the statistics do not favour self-driving just yet. According to reports, Google's car had 13 crash-likely incidents (when the operator felt the need to disengage the system), i.e. one potential accident every 74,000 miles. That is pretty impressive for a piece of software out in the real world. Yet Americans log one crash per 238,000 miles! Yes, human drivers cause more than 90% of the crashes that kill more than 30,000 people in the US every year, but they still outperform self-driving cars for now!
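The gap becomes explicit once both figures are put on a common scale, e.g. events per million miles. The sketch below only uses the two rates quoted above. It compares crash-likely incidents for the self-driving car with actual crashes for human drivers, so it is an illustrative back-of-the-envelope calculation, not a like-for-like safety study.

```python
# Rates quoted in the text, expressed as "one event every N miles".
MILES_PER_SELF_DRIVING_INCIDENT = 74_000  # Google car: crash-likely incidents
MILES_PER_HUMAN_CRASH = 238_000           # US human drivers: crashes


def per_million_miles(miles_per_event: float) -> float:
    """Convert 'one event every N miles' into events per million miles."""
    return 1_000_000 / miles_per_event


self_driving_rate = per_million_miles(MILES_PER_SELF_DRIVING_INCIDENT)
human_rate = per_million_miles(MILES_PER_HUMAN_CRASH)

print(f"self-driving: {self_driving_rate:.1f} incidents per million miles")  # 13.5
print(f"human:        {human_rate:.1f} crashes per million miles")           # 4.2
print(f"ratio:        {self_driving_rate / human_rate:.1f}x")                # 3.2x
```

With these inputs, the self-driving car records roughly 13.5 incidents per million miles, against roughly 4.2 crashes for human drivers. That is about 3.2 times as many.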

Secondly, statistics are naturally backward-looking, while decision-making is forward-looking. Statistics are reliable guides when the context is stable, but they're not necessarily good predictors when the situation changes quickly. One such change would be the mass introduction of self-driving cars, from different manufacturers, with different driving policies. Training a self-driving car to anticipate the decisions of the human drivers around it does not automatically translate into correctly anticipating how another self-driving car, from a different manufacturer, will behave.

Autopilot already has a long history… in planes

One of the best (open-access) articles written to date about the potential adoption of self-driving is Casner, S. M.; Hutchins, E. L.; Norman, D. A.: “The challenges of partially automated driving”. Communications of the ACM, 59(5), 70–77 (2016). Its discussion of “levels to describe the degree of automation” could be useful to many. So could its list of concerns (caused by automation) to keep in mind. These range from inattention, brittleness, trust, quality of feedback and skill atrophy (already observed with the introduction of GPS), to complacency, nuisance alerts and short timeframes (already observed with the introduction of driving guidance), etc. Particularly important is the difficulty humans have in taking control at short notice: the time needed to grasp the context, in order to make an appropriate decision, is substantial. This implies that handing control back to humans whenever the self-driving system doesn't know what to do is an unsatisfactory strategy.

The article contains one key anecdote worth highlighting for the rest of this discussion: “although [many] examples make a solid case for ‘automation knows best,’ we have also seen many examples in aviation in which pilots fought automation for control of an aircraft. In 1988, during an air show in Habsheim, France, in which an Airbus A320 aircraft was being demonstrated, the automation placed itself in landing configuration when the crew did a flyby of the crowd. Knowing there was no runway there, the flight crew attempted to climb. Automation and flight crew fought for control, and the autoflight system eventually flew the airplane into the trees. In this case, the flight crew knew best but its inputs were overridden by an automated system. Now imagine a case in which the GPS suggested a turn into a road that has just experienced a major catastrophe, perhaps with a large, deep hole in what would ordinarily be a perfectly flat roadway. Now imagine an automated car that forces the driver to follow the instruction. In such cases we want the person or thing that is indeed right to win the argument, but as human and machine are both sometimes fallible, these conflicts are not always easy to resolve.”

The all-too-common fallacy

An extremely frequent fallacy plagues most debates about moral agency and freedom, even though we already have the concepts and tools needed to recognise it as a fallacy! It can be summarised thus: “if we have enough information and everything is clear, then no real decision is ever needed. The choice is obvious.”

The error consists in believing that the optimisation problem of “making the best decision” is simple enough to have a unique global optimum: this would make the choice ‘obvious’.

Of course, labelling anything ‘obvious’ often points to an appeal to “common sense”. This is a variation of the “argument from incredulity”, itself a variation of the “argument from ignorance”.

I'd argue that the problem is rich enough (even within a ‘perfect’ information setup, if the available information is finite: i.e. even when what you know is true, as long as you don't know everything) for its solution to be a Pareto boundary rather than a single point!
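To make “a Pareto boundary rather than a single point” concrete: an option belongs to the boundary when no other option is at least as good on every criterion and strictly better on at least one. The sketch below uses hypothetical manoeuvres and made-up scores (higher is better), not data from any real system. Several options survive, and none of them is ‘obviously’ the best.

```python
from typing import Dict, List, Tuple

# Hypothetical criteria (higher is better):
# (safety of occupants, safety of others, comfort)
Scores = Tuple[float, float, float]

# Hypothetical manoeuvres with made-up scores, for illustration only.
OPTIONS: Dict[str, Scores] = {
    "brake_hard":   (0.9, 0.6, 0.2),
    "swerve_left":  (0.6, 0.9, 0.4),
    "swerve_right": (0.5, 0.5, 0.3),  # dominated by slow_down
    "slow_down":    (0.7, 0.7, 0.8),
}


def dominates(a: Scores, b: Scores) -> bool:
    """True if `a` is at least as good as `b` on every criterion
    and strictly better on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(options: Dict[str, Scores]) -> List[str]:
    """Names of the options that no other option dominates."""
    # An option never dominates itself, so it need not be excluded explicitly.
    return [
        name
        for name, score in options.items()
        if not any(dominates(other, score) for other in options.values())
    ]


print(pareto_front(OPTIONS))  # ['brake_hard', 'swerve_left', 'slow_down']
```

Only swerve_right is eliminated here, because slow_down beats it on every criterion. The three remaining options each trade one criterion against another, so picking among them is a genuine choice.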

Once we accept that there most likely is a set of equally desirable options, constituting a Pareto boundary, philosophically we're reaching toward the famous paradox of Buridan's ass.

Such a situation implies that choice and/or randomness remains a key necessity, in order not to get stuck when facing a set of “equally good” solutions! It is informative to note that metastability issues in digital logic (a physical case of Buridan's ass) may often be solved by some (self-)monitoring and an arbitrary choice of output whenever metastability is detected.
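The same idea fits in a few lines, assuming (hypothetically) that the options have already been reduced to a single score. The sketch detects a tie within some tolerance, the software analogue of “metastability detected”. It then resolves the tie with an arbitrary pick rather than waiting indefinitely. The function name, the tolerance and the seeded generator are illustrative assumptions; hardware arbiters work at the circuit level, not like this.

```python
import random
from typing import Dict


def choose(scores: Dict[str, float], rng: random.Random, tolerance: float = 1e-9) -> str:
    """Return the best-scoring option; if several are tied within `tolerance`,
    break the tie arbitrarily instead of refusing to choose."""
    best = max(scores.values())
    tied = sorted(name for name, s in scores.items() if best - s <= tolerance)
    if len(tied) == 1:
        return tied[0]       # a clear winner: no real choice needed
    return rng.choice(tied)  # Buridan's situation: arbitrary but decisive


rng = random.Random(42)  # seeded only so that runs are reproducible
print(choose({"hay": 1.0, "water": 1.0}, rng))  # "hay" or "water", but never stuck
print(choose({"hay": 1.0, "water": 0.5}, rng))  # always "hay"
```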

Now, a choice might be based on other problems at hand: we might choose a solution to the first optimisation issue based, e.g., on how it positions us to solve the next issue.

If this “next issue” is known in advance, the associated constraints might be included when resolving the first problem. But I'd argue that, regularly, for lack of omniscience, humans only see this next issue once they can relax their attention from the first resolution. Having some idea of the various solutions to the first problem, they can relax their concentration and let their awareness open up, to find out (there and then) what might come next… What is called ‘inattention’ in relation to driving is often linked to over-concentration on something (e.g. attempting to avoid an obstacle). It comes with too feeble an awareness that another constraint is arising and needs to be dealt with (e.g. another car changing lanes at the same time).

Buridan's ass could rationally choose to pick hunger or thirst at random as the first need to satisfy, so that satisfying the second need (thirst or hunger, respectively) becomes easy. This replaces a false dichotomy (hunger-or-thirst) with a series of problems to solve: one picks either hunger-then-thirst or thirst-then-hunger. As both series are equally easy, there's a risk of being stuck again, not knowing which series to go for. But by looking to solve both hunger and thirst rather than only one, some solutions which would have appeared irrational at first might now be considered perfectly rational: for example, relying on arbitrariness or randomness to pick one series among the two!
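The reframing shows up clearly when the two formulations are set side by side. In this toy model (the effort values and function names are hypothetical), every option of the false dichotomy leaves one need unmet. Both series, by contrast, satisfy both needs at the same cost, so picking one of them at random is a perfectly rational way to proceed.

```python
import random
from itertools import permutations
from typing import List, Tuple

NEEDS = ("hunger", "thirst")
# Hypothetical effort to reach the hay or the water: equal, as in the paradox.
EFFORT = {"hunger": 1.0, "thirst": 1.0}

Plan = Tuple[str, ...]


def dichotomy_options() -> List[Plan]:
    """The false dichotomy: satisfy exactly one need."""
    return [(need,) for need in NEEDS]


def series_options() -> List[Plan]:
    """The reframing: every order in which both needs get satisfied."""
    return list(permutations(NEEDS))


def cost(plan: Plan) -> float:
    """Total effort spent following the plan."""
    return sum(EFFORT[need] for need in plan)


def unmet(plan: Plan) -> int:
    """Number of needs the plan leaves unsatisfied."""
    return len(set(NEEDS) - set(plan))


for option in dichotomy_options():
    print(option, "unmet needs:", unmet(option))  # each leaves one need unmet

# Both series cost the same, so an arbitrary pick is a rational way to proceed.
rng = random.Random(7)  # seeded only for reproducibility
plan = rng.choice(series_options())
print(plan, "cost:", cost(plan), "unmet needs:", unmet(plan))
```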

The global perspective (i.e. tackling a Pareto boundary within a context) ends up easier to solve than the obsession with an isolated problem. This is not surprising: the global perspective captures reality better than the assumption that a unique global optimum ‘should’ necessarily exist. And a model that better captures reality is often more helpful for dealing with reality!

Maybe it's not even about the position we are in to solve the next problem (e.g. in terms of resources left available) but about which problem we want to tackle next. There are many of “equal urgency”, so this is another Pareto boundary, this time when picking what to attend to next.

In Buddhist terms, personal views and opinions are considered to blind sentient beings. Believing that it's “either hunger or thirst but not both” can get the ass stuck, when a wise, non-dualistic reframing would prove beneficial in the context at hand. A solution arises not out of the properties of the alternatives (since they're assumed identical) but out of reframing. The partisans of determinism might argue that the reframing itself is conditioned: e.g. a Buddhist might reframe the situation differently from a Christian. But believing in such a determinism would mean assuming two things. One has to decide how to act based on the first reframing that proposes a solution. And people only have very shallow identities, as if being Buddhist somehow prevented one from knowing, e.g., Christian perspectives. Given the possibility of restraint or of veto, and the infinite variations possible in terms of reframing, we're in fact back to a choice. It is a choice about when it is time to stop reframing and to consider whatever solutions have been reached so far.

Freedom and choices still have a role to play.

The above should hopefully illustrate a key difference, for now, between self-driving cars and human drivers. Based on the articles published, it seems self-driving cars are currently programmed on the assumption that there exists one optimum in each situation. Usually, the system seeks a local optimum that is good enough and can be computed quickly and efficiently… rather than a global optimum… This avoids the Nirvāṇa fallacy (or perfect solution fallacy), whereby solutions to problems are rejected because they are not perfect. But the review of the algorithm's performance is still plagued by the idea that there was one ‘best’ answer. Sure, the algorithm relies on iteration, so that an imperfect answer might get corrected the next moment, but it's still about doing the (one) best from moment to moment: no choice needed.
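The difference between a local optimum, found quickly by iterative improvement, and a global one can be sketched with a toy hill-climbing search. The objective function below is a made-up stand-in with two peaks and has nothing to do with how any actual self-driving system scores manoeuvres. Starting from different points, the same greedy rule settles on different peaks. Only an exhaustive scan is guaranteed to find the higher one.

```python
from typing import Callable


def objective(x: int) -> float:
    """Toy objective on 0..100: a local peak at x=20 (value 50)
    and a global peak at x=75 (value 80)."""
    return max(50 - abs(x - 20) * 2, 80 - abs(x - 75) * 3, 0)


def hill_climb(f: Callable[[int], float], start: int, lo: int = 0, hi: int = 100) -> int:
    """Greedy local search: move to the better neighbour until none improves."""
    x = start
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbours, key=f)
        if f(best) <= f(x):
            return x  # local optimum: no neighbour is better
        x = best


def global_optimum(f: Callable[[int], float], lo: int = 0, hi: int = 100) -> int:
    """Exhaustive search: guaranteed global optimum, at the cost of a full scan."""
    return max(range(lo, hi + 1), key=f)


print(hill_climb(objective, start=10))  # 20 (local peak)
print(hill_climb(objective, start=60))  # 75 (global peak)
print(global_optimum(objective))        # 75
```

The greedy rule is cheap and always returns an answer, which is why iterative schemes favour it. The exhaustive scan is only affordable here because the toy search space has 101 points.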

This said, should a problem occur, we would analyse it by asking ourselves: what other choice could a human have made? Maybe we'll phrase it in terms of choices made by the car's programmers. Maybe we'll phrase it in terms of average human drivers. Maybe we'll phrase it in terms of professional drivers, with a “these stunts are performed by trained professionals, don't try this at home” warning. But we would also hope that automated driving could reach the performance of the best humans, not merely that of the average human. Maybe we'll phrase it in terms of societal choices and legislation! So we revert to choices.

And we also consider which problem is the most urgent to solve! Not that we have a great track record on this particular question (we seem happy to upgrade Facebook before solving world hunger)… but this points back to a multi-criteria optimisation with imperfect information, resulting in a Pareto boundary and a call for tough choices!

In Free Will and Modern Science, Tim Bayne once concluded a review of Libet and of the case for free will scepticism with a reversal of perspective: “Rather than asking whether the sciences of human agency undermine our commitment to free will, we might instead look to them for insights into the nature of free will. What is it about human cognitive architecture that provides us with the capacity for free agency? How can the domain of human freedom be expanded? How can impediments to the exercise of free will be removed?”

Instead of perpetuating false dichotomies (body vs. mind, determinism vs. perfectly unconstrained freedom…) and pitting one view against another (biology vs. psychology vs. philosophy…), what about articulating them into a richer perspective? Each facet would be employed according to its appropriateness to the context. We are capable of combining various facets in physics (wave and particle, classical or statistical representations of thermodynamics, phase transitions…). There's no fundamental reason we couldn't do the same while studying agency.

Back to ethics… and to Buddhism?

The conundrum of Buridan’s ass also applies to ethics. If one caricatures one's ethical responsibility as “given possible alternatives, I should always choose the greater good”, what happens when two alternatives are judged equally good? Buridan himself concluded that no rational choice could be made and that we should suspend action or judgement until circumstances change.

As the reader has probably understood by now, this is just kicking the can down the road in some way, and yet it might also point towards a solution. It merely replaces one problem with another, because the very judgement that two alternatives are equally good is itself subject to Buridan's paradox. You might have as many reasons to believe they're identically good as to believe there's the tiniest of differences, notably once you put things back into context, instead of obsessing about only the first problem at hand.

Yet suspending action or judgement might also be seen 1. as gaining an opportunity for things to change (but that's most likely pointing to a context, and not to things “in and of themselves”), 2. as a veto, a wilful restraint from acting, regardless of the preceding urge or readiness to act (which echoes Libet's experiment).

One of the limits of Buridan's position is that “suspending judgement” might lead the ass to die ‘rationally’. This places no value on life itself, as an opportunity to learn, to grow, to flourish, to do good, to do right… Such a position might be ethically debated (the unexamined life is not worth living… maybe dying is equally preferable to merely being a victim of one's impulses and lack of discernment) and might call for choices.

And so acting ethically (and driving ethically) might call for us not to blindly subscribe to Spinoza's view in Ethics that there is “no absolute or free will; but the mind is determined to wish this or that by a cause, which has also been determined by another cause, and this last by another cause, and so on to infinity.” Spinoza's contribution, here, supposedly was that will and understanding cannot be separated. I say ‘supposedly’ because such non-separateness was so key to Buddhism, within the saṅkhāra aggregate and in relation to Dependent Origination. It takes someone obsessed with European philosophy but ignorant of Asian philosophy to see this as something new from Spinoza… Yet Buddhism sees this as a trait of the ignorant mind, a trait not shared with nibbāna.

In Buddhist terms, when you regress a causal chain, you cannot find an origin, indeed… but you cannot then pretend that things are deterministic! To reach a conclusion of determinism is a fallacious appeal to ignorance (we don't identify causes but let's pretend we nonetheless know that the constraints they create are absolute). Buddhists are called to suspend judgement on freedom, to act as wisely as they can (be it determined by what the situation calls for, without personal blind spots, preferences, biases, prejudices… or by Buddhist guidance, precepts, etc.) and not to cling to views about whether freedom exists or not!

It was previously said that Buridan's position places no value on life itself, as an opportunity to learn, to grow, to flourish, to do good, to do right… Reframing the situation to include a future value might seem weird. But in the causal analysis, it's hard to escape the fact that we often do act in order to create some desired consequence, i.e. our acts are potentially caused by future outcomes. Naturally, the sceptic immediately rejects the inversion: it's not the future that informs the present decision, but the present anticipation and expectation (about the future), themselves determined by the past. In Buddhist terms, this again is an appeal to ignorance. If you cannot demonstrate how the past determines the present, you cannot shift the burden of proof onto others. You cannot ask them to prove that our ‘potential’ is not itself participating in calling us, in causing us to act to meet it. In Mahāyāna terms, this is considered very seriously, under the label of “Buddha-nature.” And in fact, the (present) call of our (future) potential was also considered, under the label of “original Enlightenment”!

Buddhism puts a lot of emphasis on causality, but avoids the logical fallacy of turning an infinite (indeterminate) regress into determinism.

Buddhism also promotes a path of restraint, based on precepts and on disenchantment leading to renunciation… It agrees that many of our desires and aversions are conditioned. It also holds that freedom might primarily lie in the capacity to veto, the ability not to let impulses decide for us.

And Buddhism also puts a lot of emphasis on ethics and personal responsibility, under the name of karma: its questions put us, and our decisions, into a wider context. It trusts that awareness is a key to the cessation of ignorance, a key to wiser, better, more wholesome decisions… It prefers an engagement with Pareto boundaries and wider perspectives to a narrow-minded obsession and to a view that reality ‘should’ comply, or be made to comply, with our wishes.

Creatively engaging with a wider set of inter-dependent problems might constrain some decisions. Doing what's called for might at times appear increasingly determined by the context, and therefore the opposite of freedom… Yet Buddhism is not afraid of losing one's freedom, once freedom is acknowledged to exist primarily as a form of restraint. It prefers the utilitarian perspective: losing options for the sake of doing what's best for all sentient beings is far more ethical than losing them for the sake of living under the dictatorship of one's selfish and unexamined impulses!

All of this might seem irrelevant to the current discussions and research about self-driving vehicles. And yet maybe we need the decision-making part of these vehicles to reach a point where they decide not to drive! A nice short story expressed this in reverse terms, with the car actively deciding to kill the driver: “autonomy”, by Grey Drane.

And maybe we need to consider not giving in to our own impulses to want more, want more, want more. Automation for the selfish benefit of having Amazon deliveries within the hour is not necessarily a good economic strategy. It is also not ethical behaviour when it costs the jobs of our fellow citizens who cannot compete with robots. It might become a wholesome preference once mitigation strategies for laid-off workers are also designed and implemented, but not before. Maybe the ethical problem has to be considered on a par with, or even above, the technological problem. Otherwise, once again, we selfishly, narrow-mindedly and blindly favour upgrading Facebook (or Amazon) over solving world hunger and achieving gender equality. This is not the future we would choose if only we took a step back, for a single moment of awareness. We would step back from the treadmill, from keeping up with the Joneses, from the marketing manipulations and artificially created needs.

One key aspect of ethics is taking responsibility for one's actions. Of course, this is a serious matter for the industry itself: in case of an accident, is the owner of the car responsible? Or the manufacturer, since the owner had no say in the course of action? Does it even matter, as long as insurers cover the damage? A humorous take on this question is Robert Shrimsley's “Google car crashes: the fast and the firmware”. It was written right after an actual accident between a Google self-driving car and a bus was reported, and it starts with the dialogue: “You drove into that bus.” “Negative, I merely failed to anticipate the stupidity of the human.” Like all good satire, some of its underlying currents might prove too close to reality to be comfortable.

Buddha-nature is the potential to choose wisely, to pick wisely. Such a potential cannot be grasped. Reasons and determinacy constitute what's discernible, graspable, expressible, understandable. Wisdom (free from prejudices, views, opinions, tendencies, causal determinism) cannot be described in advance; only its manifestations might be described, in hindsight… and yet it also exists as the solution to navigate an infinitely rich, contingent context.

Optional technical section:

The issue of navigating (i.e. making choices in) infinitely rich contexts is explicitly considered in mathematics, with the axiom of choice (which only brings value when faced with infinite collections). This axiom allows us to state that a choice is possible, without having to show how. This matters when a procedure (to choose) would require an infinite time to run, i.e. an amount of time we don't have. The axiom nonetheless allows us to move on to the next step, rather than stay stuck with either an incomplete or an indeterminate set of chosen elements.
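For reference, here is a standard formulation of the axiom, stated in its choice-function form (other equivalent forms exist). For every family $\mathcal{F}$ of non-empty sets, there is a function $f$ picking one element from each set:

$$
\forall \mathcal{F}\;\Bigl(\varnothing \notin \mathcal{F} \;\Longrightarrow\; \exists f\colon \mathcal{F} \to \textstyle\bigcup\mathcal{F}\ \ \forall A \in \mathcal{F},\ f(A) \in A\Bigr)
$$

The axiom only asserts that such an $f$ exists; it gives no procedure for computing it. For a finite family, such a function can be built step by step without the axiom, which is why its value lies in the infinite case.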

If one were to deliberately make a decision in life while adhering to the tenets of determinism, one should consider one's reasons for the decision. But to assess their validity, one should also consider how these reasons came to be… in an infinite regress. One does have to stop the regress at some point. Yet there cannot be any rational reason to do so prior to examining the causes of the prospective stopping point: that point is, by construction, arbitrary.

By rejecting permanence, Buddhism de facto rejects finitude. This is more compatible with science than most people believe. For example, the four fundamental forces of physics (gravitational, electromagnetic, strong nuclear and weak nuclear) arose early in the history of the universe, but they may have arisen from a single fundamental force earlier on. Gravity separated at about $10^{-43}$ s, the strong force at about $10^{-35}$ s, and the weak and electromagnetic forces at about $10^{-11}$ s! If even these fundamental laws are considered impermanent, then the possible variations might be endless. And the big bang is unlikely to be a singularity: when we regress the history, we reach a point at which temperature prevents further regression. So we might also need to consider an infinite past, rather than the classic 13.8 billion years that is often presented as scientific but is too simplistic (cf. Etienne Klein's talk on whether we can think the origin of the universe; turn subtitles on).

If we navigate infinities, then the relevance of choices and freedom cannot be discarded so easily. Even if freedom cannot be proven, it might still need to be postulated in order to reflect our reality as well as possible.

We don't need to obsess about moral agency and about the existence of freedom (or lack thereof), but we cannot discard these questions lightly either. This holds for self-driving cars, and for our own desire for (or aversion to) their arrival, with its side-effects.

Buddhist philosophy is relevant in today's world. While it focuses much on causality, it doesn't discard the possibility of a “freedom from conditioning”: nibbāna. And, in Mahāyāna, it doesn't even separate this nirvāṇa from the conditioned world, or saṃsāra. Freedom and the ability to choose might indeed be integral to living in an infinitely entangled world, in particular if one aims to live wisely. This may come thanks to the freedom that can arise when some spaciousness is created in lieu of running and chasing, made possible by veto and self-restraint!

Food for thought: would you choose to live under the dictatorship of unexamined impulses? Or would you consider exploring another perspective?